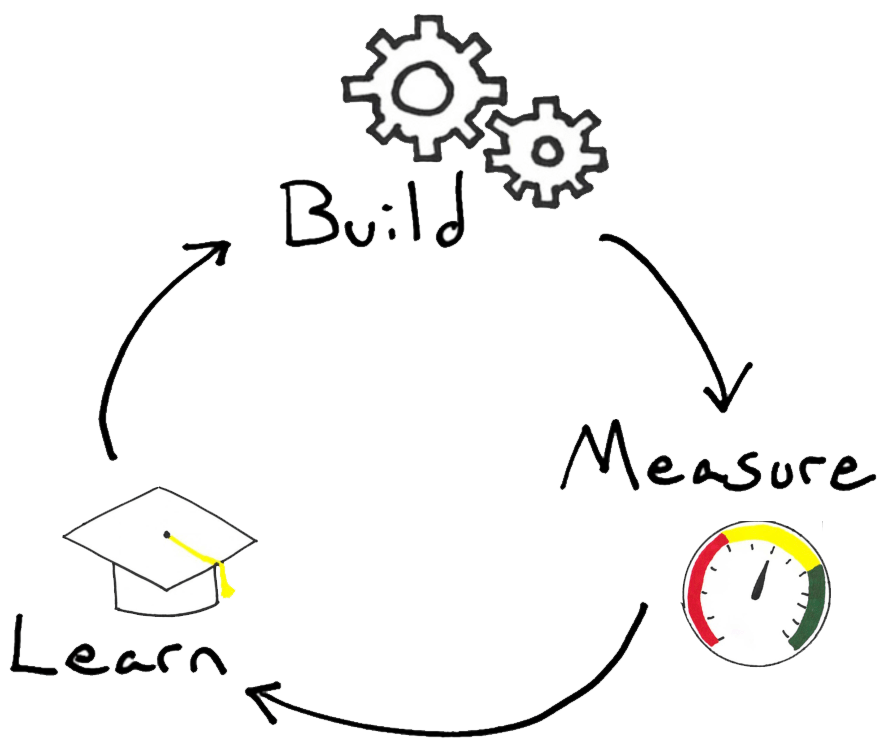

“Go fast” is one of the principles of running a lean startup experiment. The faster we go around the Build-Measure-Learn loop, the faster we validate our business model and the sooner we have a business.

(Note: To be accurate, we’re not validating our business model, we’re successfully failing to invalidate it. Correction thanks to Roger Cauvin.)

So how fast is fast enough?

Many teams wonder whether they’re moving fast enough. Many accelerator managers and VPs of innovation worry about whether their teams are running enough experiments or doing enough research. What’s normal?

tl;dr: We should target at least one experiment or research activity per week.

Knowledge Velocity

In this context, speed refers not to our ability to produce code or products, but to our ability to learn.

The output of the Build-Measure-Learn loop is knowledge. Ideally, we would measure the amount of knowledge coming in, but not all knowledge is equal.

Figuring out which of 41 shades of blue converts best sounds like a lot of experiments, but is it a lot of knowledge? Wouldn’t one experiment that determines whether someone is willing to pay for your product be more valuable?

True.

In agile, stories (think of them as tasks if you’re not familiar with agile) are often weighted by difficulty, time estimate or some other method such as planning poker. Weighting experiments by the knowledge they produce seems like a great idea in theory, and VPs of innovation love this metric, but it’s excessive.

Edit: David Bland pointed out that we may not have been direct enough here, so we’ll say it more clearly:

Assigning knowledge points to experiments is a terrible idea.

Measuring knowledge output sounds important when comparing one team to another or trying to measure the overall knowledge output of 20-100 different teams. However, from the perspective of a single team, it’s irrelevant. Here are two reasons why:

It’s All Relative

We can generally only run 1-2 experiments at the same time, not 20. So it makes no difference how many “knowledge points” that experiment is worth.

Only one question matters: Are we working on the most critical business risk? If we simply stack rank our assumptions in terms of risk, we should clearly work on the top one! The point value is irrelevant.

We could spend time assigning point values and submitting reports that can’t realistically be compared with risks from a completely different project. The VP of Innovation could then start discounting point values based on the relative complexity of each business model, but that time is better spent actually doing the work.

The only relevant question is: Are we working on the most critical business risk?

The answer is binary: yes or no.

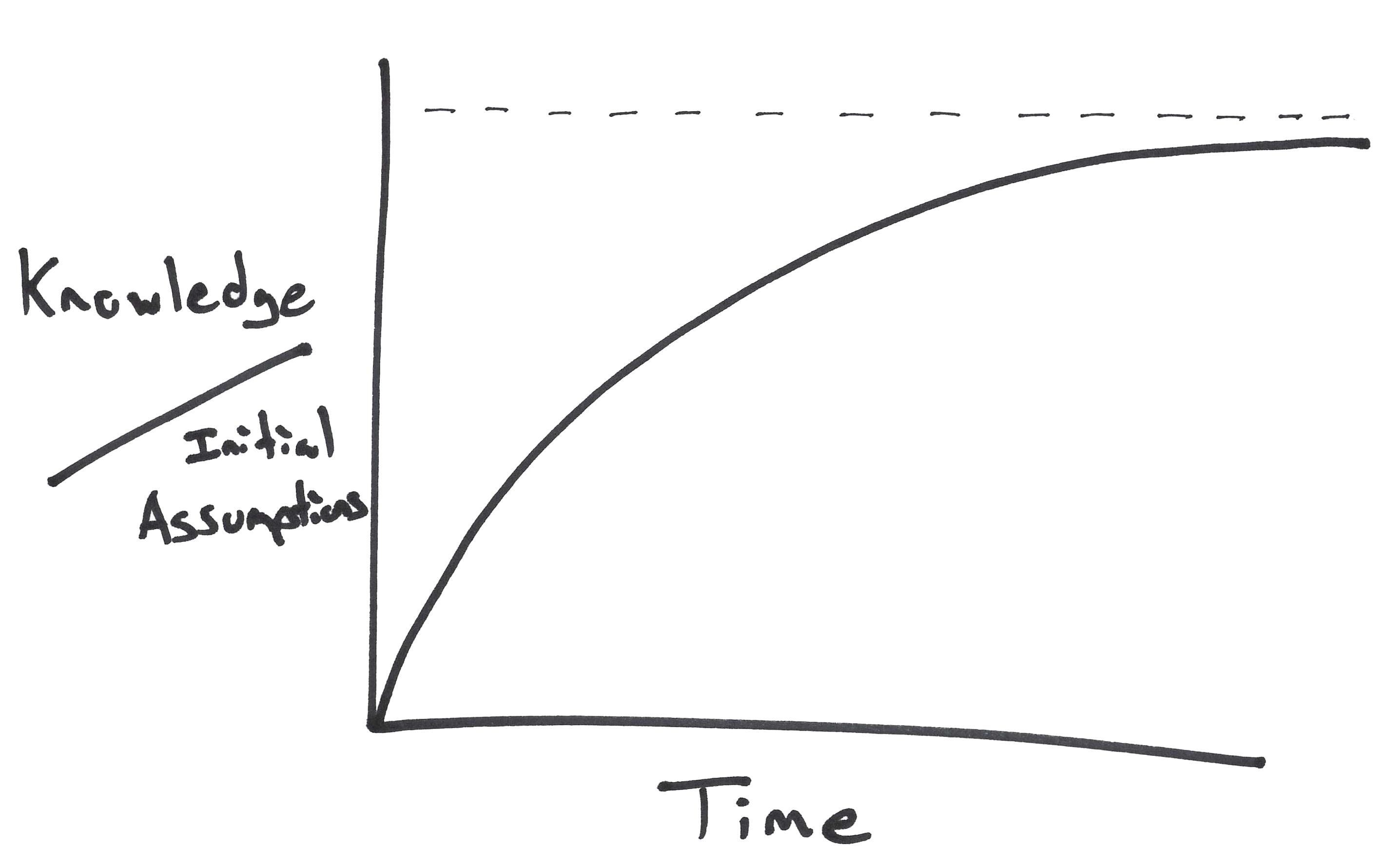

Knowledge Velocity Should Slow Down

In fact, if the team is doing really well and the project is progressing, the knowledge velocity should be getting lower over time.

Why? Because we’ve learned all the important things! The Business Model is solid!

Now it’s about optimizing the little details, so the amount of knowledge we gain each week should get smaller and smaller.

In other words, our knowledge-to-assumption ratio (as Dan Toma calculates it) should approach 1. (At least until the business environment changes or is disrupted, at which point our ratio is probably back to zero.)

Lean Startup Experiment Benchmark – One Per Week

So instead of trying to precisely measure the number of knowledge points produced each week, we should just measure our experiment/research velocity. It’s not ideal, but it’s a way of asking, “Are we learning anything about our business model each week?”

Benchmarks are tough. A B2B direct sales team would reasonably argue that it will have a slower velocity than a consumer SaaS product that can A/B test its landing page every day.

True. There are hundreds of good reasons why different teams in different verticals with different business models will have different velocities.

But let’s put a stake in the ground and say that at least one experiment or research activity per week is the lean startup benchmark.

We will no doubt get hate mail for saying this (“It’s too fast!” “It’s too slow!”), but here’s why:

Achievable

Firstly, one lean startup experiment per week is achievable for any team in any context.

Some teams will say it’s impossible because they have to build really complicated things or their sales cycle is too long.

LIES!

It will take a long time to build!

Most likely, we don’t need to build it. Concierge test it, rig the backend with Mechanical Turk and Wizard of Oz test it, or, did we remember to smoke test it first? There’s always some test that some team members can run while the basic infrastructure is being built.

If it’s truly impossible to run one experiment per week, another obstacle is in play, most likely an incomplete team or a bureaucracy problem.

Our sales cycle is too long!

This is the same excuse. Instead of measuring the entire six-month sales cycle, we could measure the number of meetings we can set up based on our initial value proposition, or even the open rate on our emails. There is always a way to run research or an experiment this week.

If one experiment per week sounds like too much, we’re doing it wrong.

Measure but Don’t Count

“At least” is a key part of the benchmark. It means that zero experiments is a failure, but three lean startup experiments per week are not necessarily better than two.

This is because “you get what you measure.” As human beings, we’re very good at optimizing for arbitrary metrics. So if we’re forced to count lean startup experiment velocity, it’s pretty much guaranteed that we’ll game the system and run as many teeny tiny experiments as possible.

Then we’re just back to testing 41 shades of blue without tackling our truly risky business assumptions.

Our experience with teams from early stage to enterprise, and from Japan to Switzerland, has been that when we set a benchmark of at least one experiment/research method per week, teams often rack up two, three or more. Setting a goal of ever-increasing numbers of experiments, on the other hand, tends to lead to smaller and smaller experiments or to arguments about the validity of the knowledge produced.

(If you have a different experience, we’d like to hear about it. Tweet us.)

Setting a fail condition, rather than asking teams to run as many experiments as possible, prevents them from optimizing for the number of experiments instead of making genuine business progress. In this way, we measure what matters but don’t arbitrarily count experiments.

It becomes binary: “Are we learning?” Yes or no.

Setting a fail condition also helps build a good habit.

The Habit of Lean Startup

At least one experiment per week also helps establish a habit. It gives us a regular cadence, so we can hold our sprint planning session, our retro, etc. at the same time every week.

With an every-other-week cadence, it’s ever so slightly harder to build a habit around a particular trigger. In this case, the trigger is a day of the week. It’s why we have “casual Fridays” and “Taco Tuesdays.” We don’t have “bi-weekly Taco Tuesdays.”

Personally, if I don’t have my retro and backlog grooming on Friday, my brain is going to churn all weekend and I won’t be refreshed for the week ahead. That’s a good thing.

It means I have an internal motivation and trigger for getting my experiments done. It’s a very satisfying feeling seeing all those Trello cards go in the archive.

Of course, feeling satisfied is hardly a scientific metric, but we’re humans, not robots. (At least for a while longer.)

So we can still ask, “Do we feel we made progress last week?” Yes or no.

Momentum

If you have the momentum of a sloth, going 1 mph would be a huge improvement.

While the benchmark of one lean startup experiment / research per week has been consistently achievable by almost every team we’ve worked with, there is one other consideration: Momentum.

How fast were we going before?

Ultimately, lean startup is about continuous improvement. If our previous velocity was one lean startup experiment per year, then running one per month isn’t too bad.

It’s still not great, but it’s better. So before beating ourselves up too badly, we should just ask, “Are we learning faster?”

If yes…high five and keep going.

Team Checklist

To see how we’re doing we can ask these questions each week:

- Are we working on the most critical business risk?

- Did we run a lean startup experiment or research project?

- Do we feel like we’re making progress?

- Are we learning faster?

Or to put it into commandments, each week we should:

- Prioritize

- Learn something new

- Build good habits

- Continuously improve

Lessons Learned

- Lean Startup teams should target at least one research/experiment method per week.

- Measuring knowledge velocity is impractical, and knowledge is not comparable between teams.

- Measure velocity, but don’t count and gamify.

- Continuous improvement is more important than hitting an arbitrary goal.
